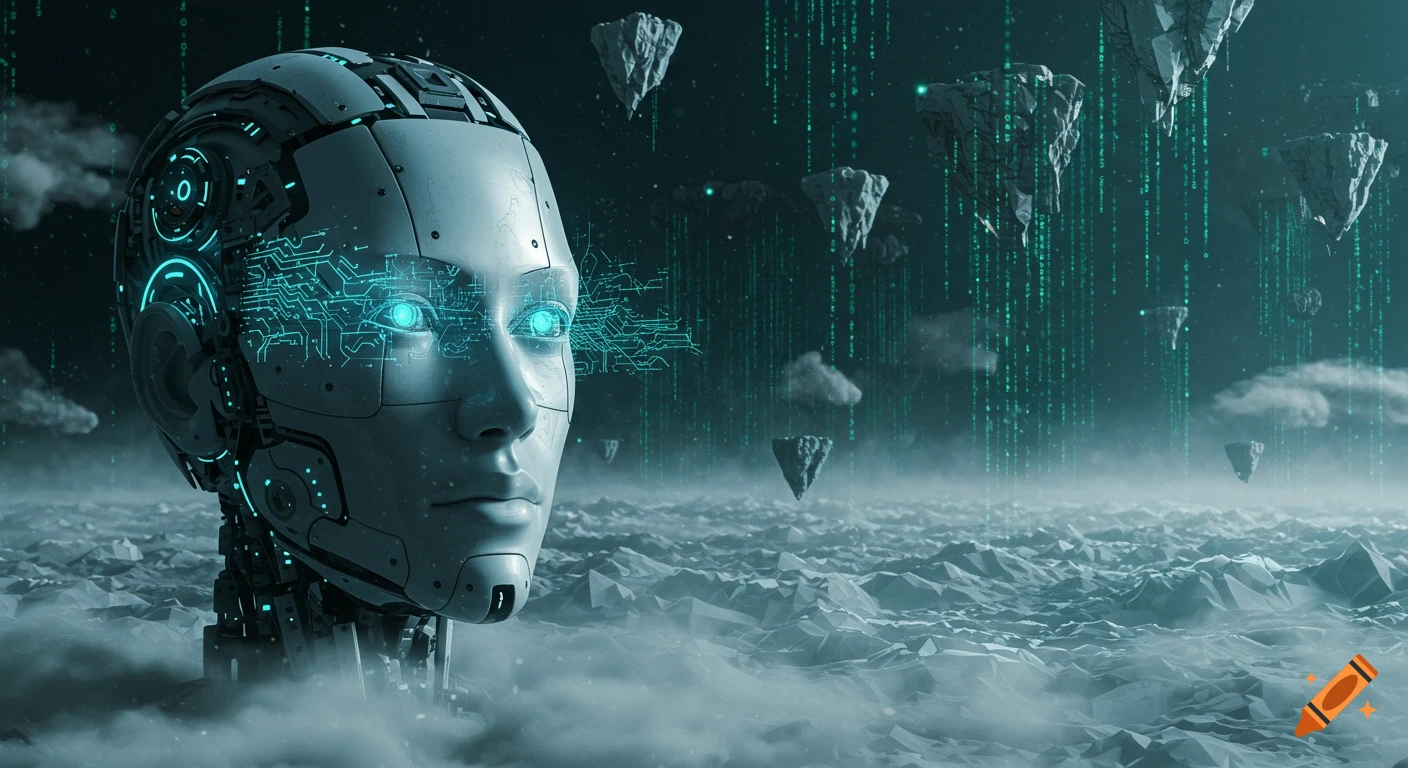

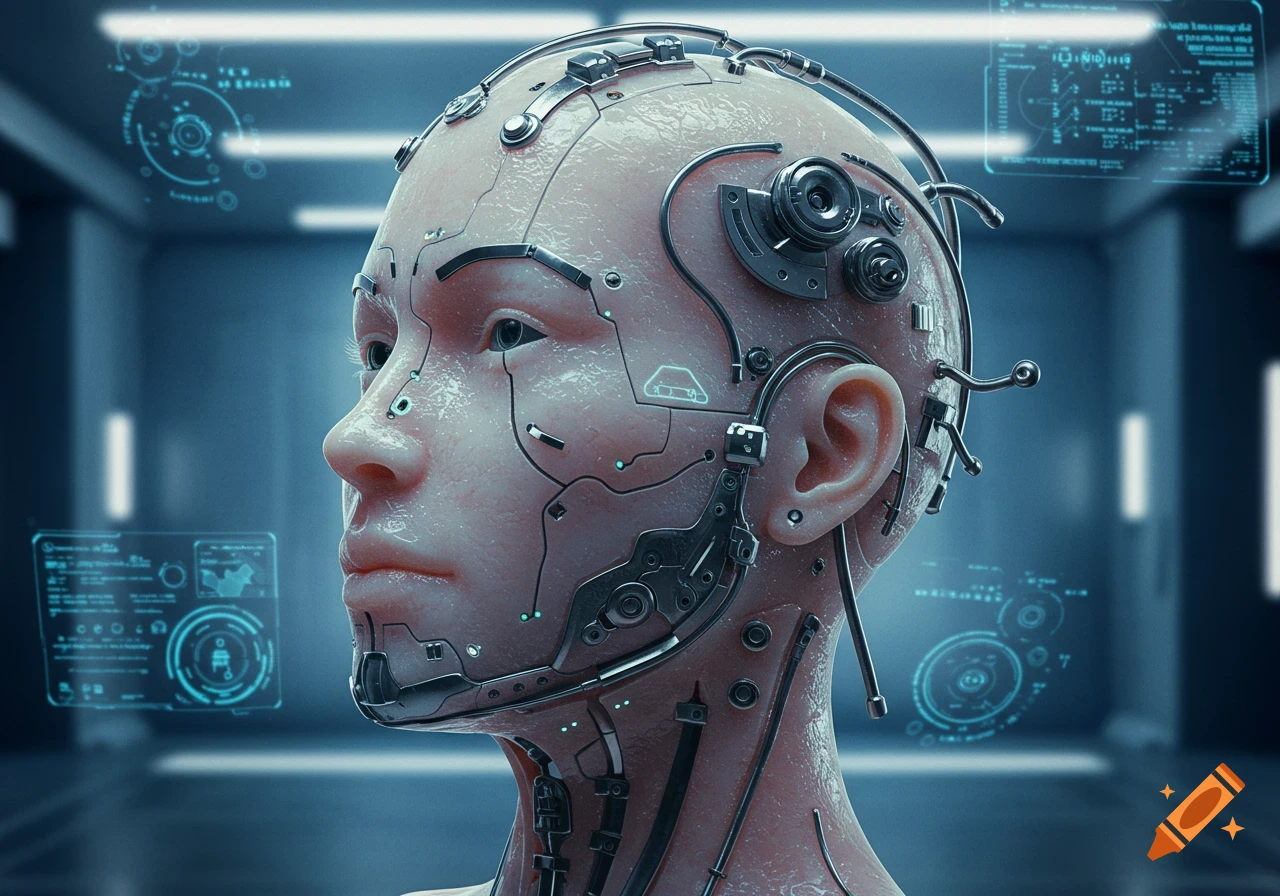

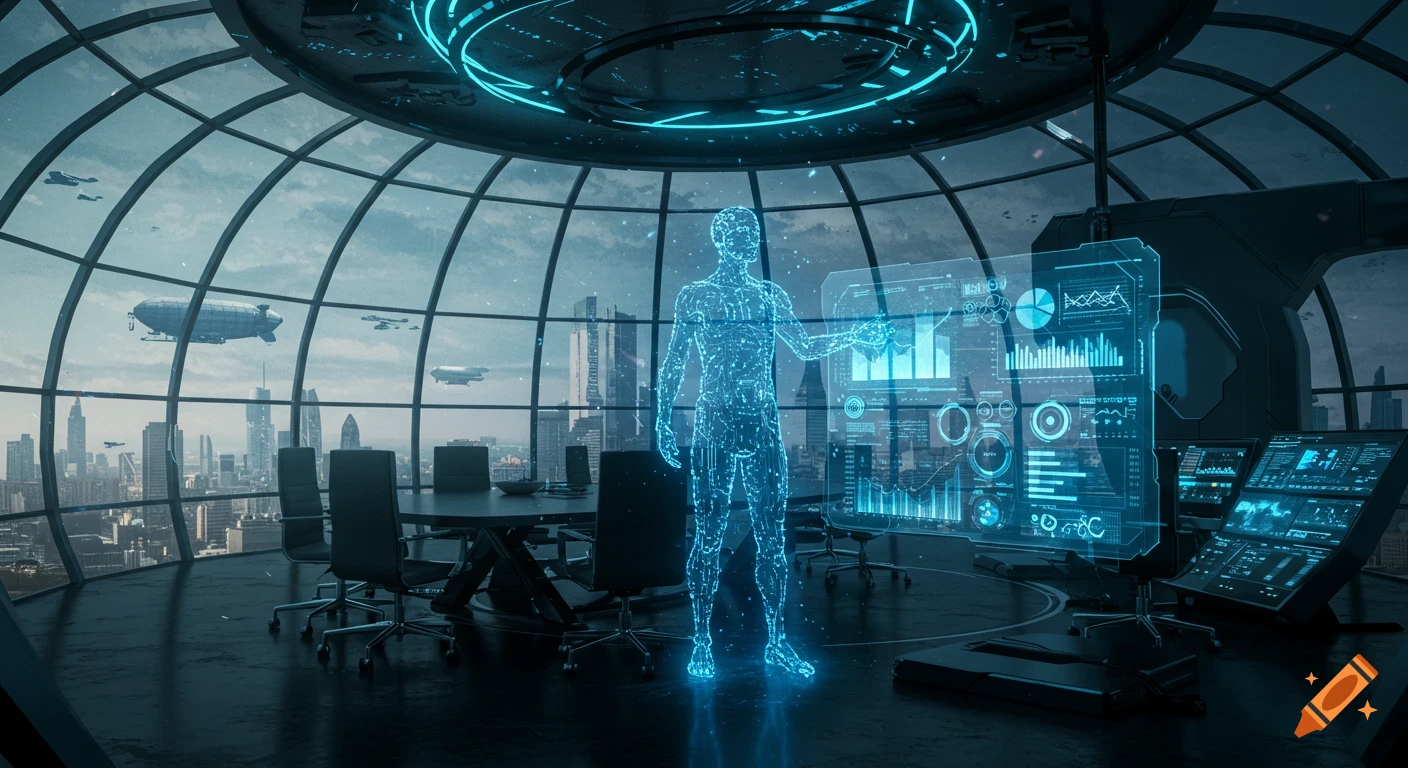

Large glowing AI head made of circuits, with transparent figures interacting with holographic screens in a futuristic setting.

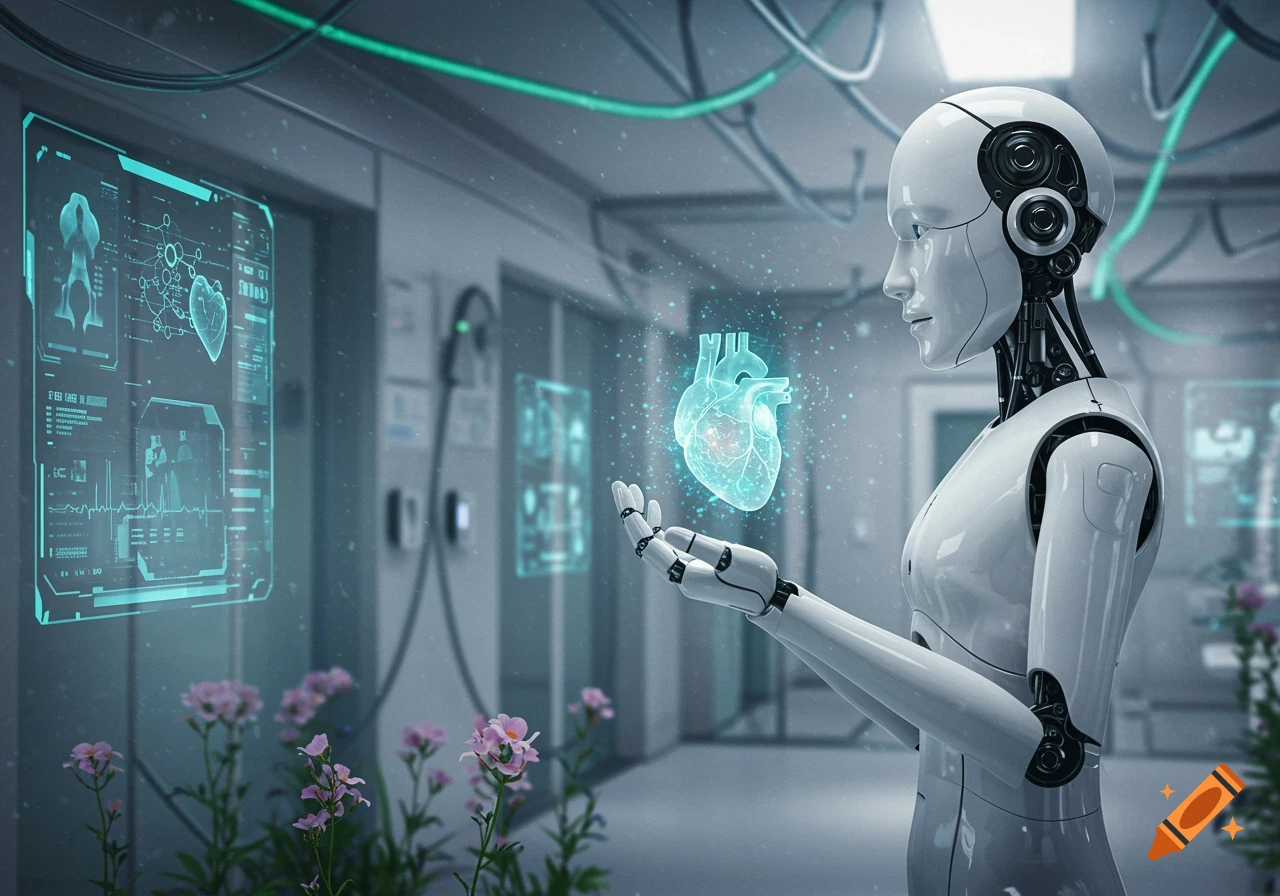

Efforts to refine AI outputs, such as reinforcement learning from human feedback (RLHF), rely on human annotators who rate and curate responses to reduce harmful or biased content. However, this labor is often precarious, and it raises ethical concerns about exploiting workers to maintain AI’s socially acceptable façade. The resulting system is less a thinking entity than a complex text factory, reinforced by human judgment at the margins.